About Me

I am an Assistant Professor of Electrical Engineering and Computer Science at New York University. Previously, I worked as a senior research scientist at Meta Reality Labs. I received my PhD in Computer Science from Harvard University, and my bachelor's and master's degrees in Electrical Engineering and Statistics from the University of Toronto.

My Chinese name is 张赛骞.

I am recruiting PhD students with backgrounds in AI and computer architecture. If you are interested, please reach out to sailabapplication@gmail.com.

Research Overview

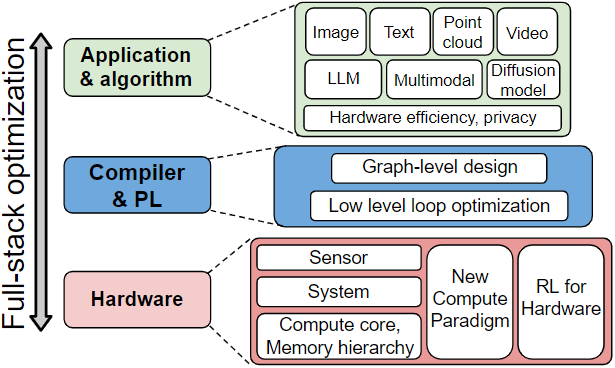

My research lies at the intersection of deep learning, AR/VR, and hardware system design. I am passionate about designing optimized AI algorithms and efficient hardware implementations for AR/VR computing.

Hardware Architecture:

- Domain-specific accelerators for compute-intensive AI applications, new computation paradigms for DNNs
- Recent interest: AI accelerators for efficient AR/VR

Application & Algorithm:

- Efficient DNN computing, pruning, quantization, NAS
- Recent interest: Efficient LM KV caching, LM quantization, AI privacy for AR/VR

Others:

- Multi-agent reinforcement learning and its applications
- AI compilers

News

[02/2026] One paper with my collaborators got into CVPR'26!

[02/2026] One paper with my collaborators got into EIN workshop at IEEE INFOCOM'26.

[01/2026] Two papers got accepted by ICLR'26, see you in Brazil!

[01/2026] I am serving as TPC co-chair for ISLPED 2026. Please consider submitting your strong work there!

[01/2026] I delivered an invited talk at the University of Toronto.

[01/2026] One paper with my collaborators got accepted by CICC 2026!

[01/2026] One paper with my student got accepted by ECAL 2026!

[12/2025] We got support from Andes Technology, thanks Andes!

[12/2025] We will be organizing a workshop on visual computing at ASPLOS 2026. More details to come!

[12/2025] Our proposal is supported by the NSF National Artificial Intelligence Research Resource Pilot, thanks NSF!

[11/2025] One paper got accepted at DATE'26!

[10/2025] One paper from my student got accepted at ASPLOS'26!

[10/2025] I was selected as a top reviewer for NeurIPS 2025.

[9/2025] Three papers got accepted in NeurIPS'25, with one accepted as a Spotlight!

[8/2025] One paper got accepted in EMNLP'25!

[7/2025] One paper of my PhD student got accepted in MICRO-58!

[7/2025] One paper got accepted in COLM'25!

[7/2025] One paper got accepted in ACM MM'25!

[6/2025] One paper got accepted in TMLR'25!

[6/2025] I presented our POLO paper at ISCA'25!

[6/2025] I gave a talk on AR/VR computing at ISCAS'25!

[5/2025] One paper with my collaborator got accepted in ICIP'25!

[5/2025] One paper with my collaborator and my students got accepted in ACL'25!

[4/2025] My PhD student, Tianhua Xia, has been named a 2025 DAC Young Fellow!

[4/2025] My master's student, Wenxuan Liu, has been awarded the 2025 ECE Theodor Tamir Award for the Best MS Research!

[4/2025] My master's student, Zhenyuan Dong, has been awarded the 2025 ECE Myron M. Rosenthal Award for Best MS Academic Achievement!

[4/2025] Our paper with my intern and PhD students on incremental gaze-tracked foveated rendering got accepted at ICS'25!

[3/2025] Our paper on efficient gaze-tracked foveated rendering for virtual reality got accepted at ISCA'25!

[3/2025] I delivered a seminar talk on AR/VR computing at Stevens Institute of Technology!

[3/2025] I presented our paper at IEEE VR'25!

[3/2025] Check out our latest survey on speculative decoding! It covers almost all of the current research literature on speculative decoding strategies, ranging from advanced algorithms and system implementation to applications in other domains.

[2/2025] Our paper on efficient segmentation in augmented reality got accepted at CVPR'25!

[2/2025] One paper with my intern student and PhD student got accepted at ASPLOS'25!

[2/2025] I gave a talk at EI 2025 on hardware and software codesign for AR/VR computing.

[1/2025] One paper with my master's student got accepted at IEEE VR'25 as a journal paper!

[12/2024] I gave a talk to Boston Fusion Corp.

[12/2024] I am serving on the TPC for ISLPED'25. Please consider submitting your work there.

[11/2024] One paper with my intern students got accepted at DATE'25!

[11/2024] I will co-organize a workshop on AR/VR Computing at ASPLOS'25!

[10/2024] One paper got accepted at WACV'25!

[10/2024] Our survey on parameter-efficient fine-tuning was accepted by Transactions on Machine Learning Research (TMLR)!

[10/2024] I am serving on the PC of DAC'25 and ISCA'25. Please consider submitting your great work there!

[10/2024] One paper got accepted at IEDM'24!

[9/2024] One paper got accepted at EMNLP'24!

[9/2024] I received a gift fund from Meta. Thank you Meta!

[9/2024] One paper got accepted at ASPDAC'25!

[8/2024] I am serving as a session chair at ISLPED'24.

[7/2024] One paper got accepted at MLCAD'24!

[7/2024] I am serving on the PC of HPCA'25.

[6/2024] I delivered a talk on Efficient LLM and Accelerator Design to Andes Technology.

[6/2024] One paper got accepted at ICPP'24!

[5/2024] Our paper on AR/VR system simulation got accepted at ACM TODAES!

[5/2024] Two papers got accepted at ISLPED'24!

[4/2024] Check out our latest survey on Parameter-Efficient Fine-Tuning (PEFT) for Large Models. This work was done with my talented intern students. From algorithm design to hardware efficiency and system implementation, this comprehensive survey covers multiple aspects of current PEFT research.

[3/2024] One paper with my intern student, Wenshuo, got accepted in NAACL'24!

[2/2024] Two papers got accepted in ISQED'24.

[11/2023] I am serving on the TPC for DAC'24 and ISQED'24.

[10/2023] Our paper "Co-Designing AI Models and DRAMs for On-Device Training" was accepted by HPCA 2024!

[9/2023] Our paper on efficient reinforcement learning, which I co-authored with my high school mentee, Gavin An, has been accepted for publication in JEI.

[6/2023] I gave two talks on DNN hardware and algorithm codesign at Tsinghua University and Peking University.

[5/2023] Our paper "Co-Designing AI Models and DRAMs for On-Device Training" has been submitted to arXiv. It proposes an algorithm/hardware codesign solution for efficient on-chip transfer learning that completely eliminates off-chip DRAM traffic during training.

[3/2023] My high school mentee, Gavin An, has successfully finished his AI project on efficient reinforcement learning. A paper got accepted in JEI!

Collaborators